AstroSLAM: Robust Visual Localization in Orbit

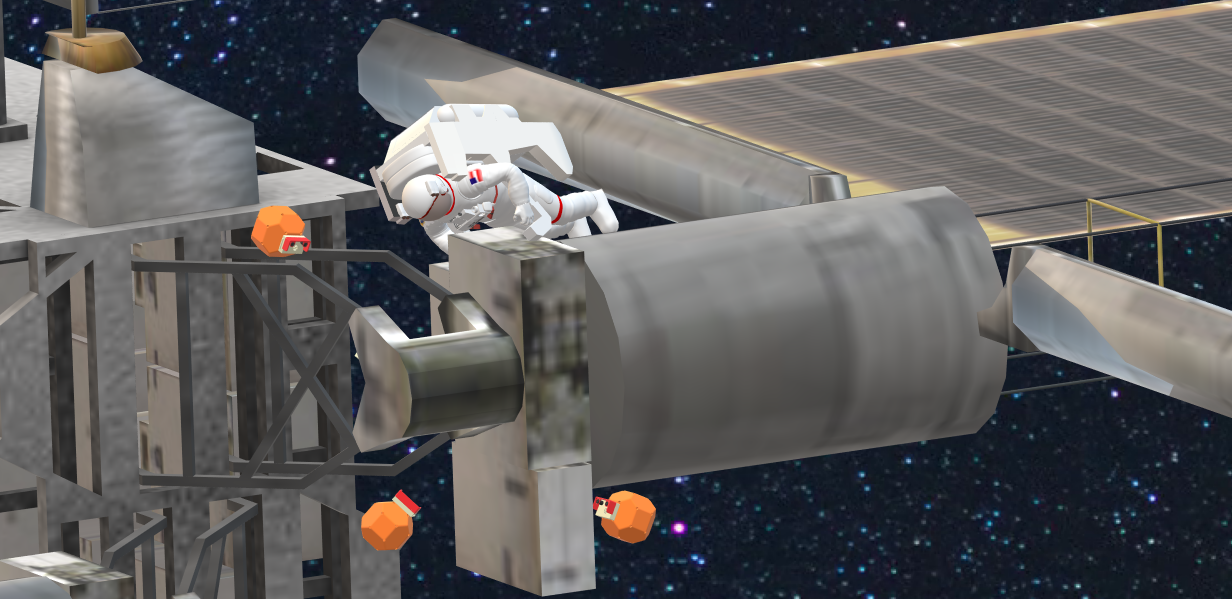

Space robotics technology is maturing fast enough that it is time to start thinking about how to use it to support routine robotic operations in space in the not-so-distant future. Recent technological breakthroughs for ground robots, including perception and planning algorithms, machine-learning-based pattern recognition, autonomy, new computer hardware architectures (GPU, ASIC, FPGA), human-machine interfaces, and dexterous manipulation, among many others, pave the way for similar advancements in robotic on-orbit operations to support failure mitigation, large flexible structure assembly, on-orbit debris removal, inspection, hardware upgrades, etc. Owing to the harsh environmental conditions in space, only robots with increased robustness and high levels of autonomy can perform these missions.

Key Research Objectives

This research will develop novel visual perception, localization, mapping, and planning algorithms that will enable new capabilities in terms of situational awareness for space robots that can work alone or alongside astronauts in orbit as “co-robots”. The proposed research plan will develop novel automated feature extraction and matching algorithms adapted to the challenging imaging conditions and motion constraints imposed in space, so as to enable robust and reliable relative pose estimation, 3D shape reconstruction, and characterization of space objects. We will develop innovative optimal planning and prediction methods matched to these new perception capabilities that also account for fuel usage and orbital motion constraints. The final outcome will be the ability of astronauts and space robots to work together to enable:

- inspection, monitoring, and classification of resident space objects (RSOs);

- maneuvering, proximity operations, and docking, including salvage and retrieval of malfunctioning or tumbling spacecraft;

- servicing, construction, repair, upgrade, and refueling missions for space assets in orbit.

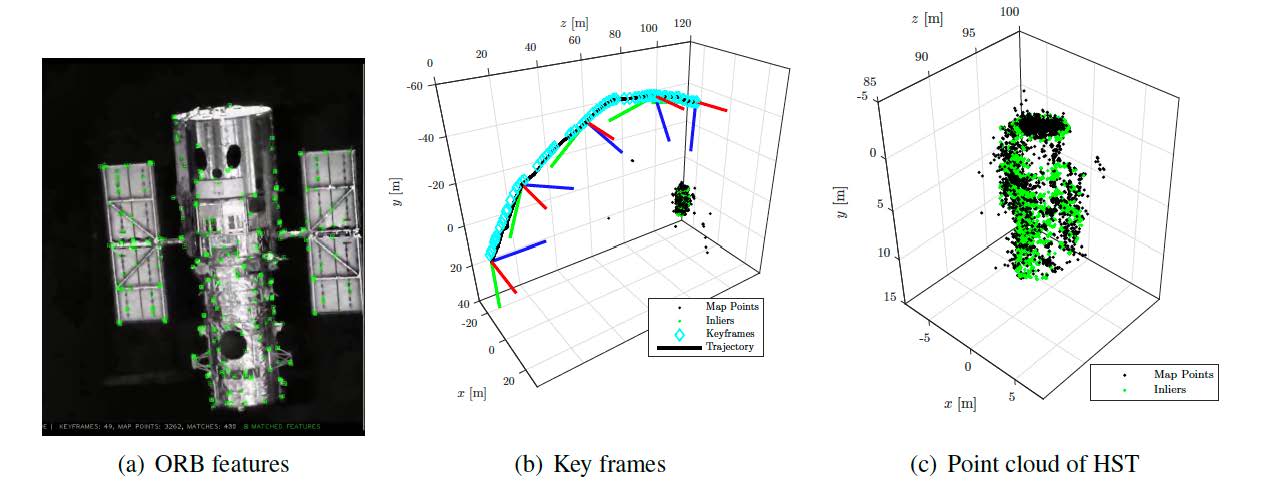

Deep NN Architectures for Automated Feature Detection and Matching in Space

This task investigates novel image features that maximally match across multiple camera views, demonstrate high repeatability, are robust to large changes in viewing angle with respect to the RSO, and adapt to a highly collimated light source (e.g., the Sun) in the absence of typical atmospheric scattering. These features will be generated automatically using deep neural network (DNN) architectures. This task will also exploit surface reflection models to extract the information from images that is relevant for successful feature extraction.
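
As a rough illustration of the matching step, the sketch below pairs descriptors from two views using mutual nearest neighbours and a ratio test. This is a generic, commonly used baseline and not the project's method: the function name `match_descriptors`, the `ratio` threshold, and the use of plain Euclidean distance are all assumptions made for the example, and the descriptors could come from any detector, learned or hand-crafted.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Mutual nearest-neighbour matching with a ratio test (hypothetical helper).

    desc_a: (N, D) descriptors from view A.
    desc_b: (M, D) descriptors from view B, with M >= 2.
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Pairwise Euclidean distances between every descriptor in A and in B.
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    nearest_b = np.argmin(dists, axis=1)  # best candidate in B for each row of A
    nearest_a = np.argmin(dists, axis=0)  # best candidate in A for each row of B
    matches = []
    for i, j in enumerate(nearest_b):
        if nearest_a[j] != i:
            continue  # keep only matches that agree in both directions
        best, second = np.sort(dists[i])[:2]
        if best < ratio * second:  # reject ambiguous matches
            matches.append((i, int(j)))
    return matches
```

The mutual check and the ratio test both trade recall for precision, which is usually the preferred direction when the matches feed a pose estimator; the threshold of 0.8 is only a conventional starting point.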

Full 4D Situational Awareness (4DSA) for Robots and Humans in Space

This task will provide space-time situational awareness for astronauts in orbit by combining multiple camera streams in a factor-graph optimization framework to generate poses and predicted trajectories for all objects in the visual field. SLAM processes running “on the edge” will produce 3D representations used to match nearby objects. We will make use of strong motion priors to enhance robustness and reduce the computational burden.
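
To give a feel for how measurement factors and a motion prior combine in one optimization, here is a deliberately simplified sketch. It assumes a linear-Gaussian toy problem (position-only states, direct position measurements, and a constant-velocity prior), so the factor graph reduces to a single linear least-squares solve. The function name `smooth_track` and the two noise parameters are hypothetical; a real visual SLAM back end would use nonlinear camera factors and a dedicated solver.

```python
import numpy as np

def smooth_track(measurements, meas_sigma=1.0, prior_sigma=0.1):
    """MAP track estimate from position measurements plus a
    constant-velocity prior, solved as weighted linear least squares.

    measurements: (T, d) array of noisy positions, with T >= 3.
    Returns a (T, d) array of estimated positions.
    """
    z = np.asarray(measurements, dtype=float)
    T, d = z.shape
    rows, rhs = [], []
    # Unary measurement factors: p_t should be close to z_t.
    for t in range(T):
        r = np.zeros(T)
        r[t] = 1.0 / meas_sigma
        rows.append(r)
        rhs.append(z[t] / meas_sigma)
    # Motion-prior factors: p_{t-1} - 2 p_t + p_{t+1} should be close to 0,
    # i.e. the velocity should not change between consecutive steps.
    for t in range(1, T - 1):
        r = np.zeros(T)
        r[t - 1], r[t], r[t + 1] = 1.0, -2.0, 1.0
        rows.append(r / prior_sigma)
        rhs.append(np.zeros(d))
    A = np.vstack(rows)  # one row per factor
    b = np.vstack(rhs)   # one right-hand side per factor
    estimate, *_ = np.linalg.lstsq(A, b, rcond=None)
    return estimate
```

A small `prior_sigma` relative to `meas_sigma` encodes a strong motion prior: a single bad measurement is then pulled back toward the trajectory implied by its neighbours rather than being trusted outright.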

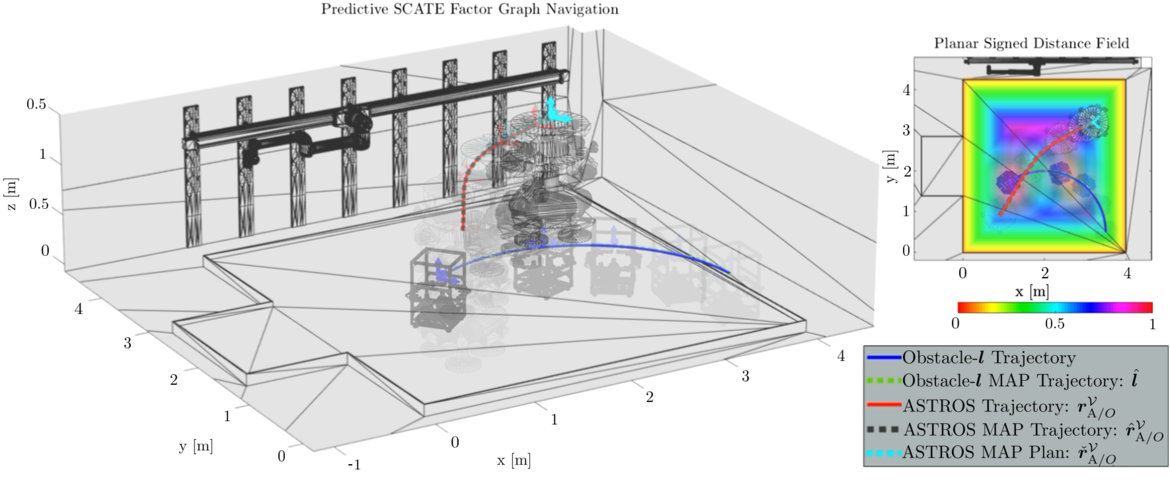

Multi-platform Kinodynamic Motion Planning for 4DSA

To provide continual 4D situational awareness to astronauts, we will develop methods to optimally move all agents involved in response to evolving task needs. As input, we assume the information from the combined camera streams, as well as additional task-specific visibility and fuel objectives to be satisfied. We will develop algorithms that provide finite-horizon plans for all platforms, satisfying task objectives while avoiding collisions.
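
The following sketch shows one very reduced form of finite-horizon planning under orbital motion constraints. It assumes a single platform, a circular reference orbit modeled with the Clohessy-Wiltshire equations, impulsive velocity changes at fixed intervals, and the squared norm of the impulses as a stand-in for fuel. Collision avoidance and visibility objectives are not modeled. The names `cw_transition`, `plan_impulses`, and `rollout` are hypothetical and chosen only for this example.

```python
import numpy as np

def cw_transition(n, dt):
    """Closed-form Clohessy-Wiltshire state transition matrix.

    State is [x, y, z, vx, vy, vz] with x radial, y along-track,
    z cross-track; n is the mean motion of the reference orbit (rad/s).
    """
    s, c = np.sin(n * dt), np.cos(n * dt)
    return np.array([
        [4 - 3 * c,          0, 0,      s / n,            2 * (1 - c) / n,          0],
        [6 * (s - n * dt),   1, 0,      -2 * (1 - c) / n, (4 * s - 3 * n * dt) / n, 0],
        [0,                  0, c,      0,                0,                        s / n],
        [3 * n * s,          0, 0,      c,                2 * s,                    0],
        [-6 * n * (1 - c),   0, 0,      -2 * s,           4 * c - 3,                0],
        [0,                  0, -n * s, 0,                0,                        c],
    ])

def plan_impulses(x0, x_goal, n, dt, steps):
    """Minimum-norm impulse sequence taking x0 to x_goal in `steps` intervals."""
    x0 = np.asarray(x0, dtype=float)
    x_goal = np.asarray(x_goal, dtype=float)
    phi = cw_transition(n, dt)
    B = np.vstack([np.zeros((3, 3)), np.eye(3)])  # impulses change velocity only
    # Block k maps the impulse applied at the start of interval k to the final state.
    G = np.hstack([np.linalg.matrix_power(phi, steps - k) @ B for k in range(steps)])
    drift = np.linalg.matrix_power(phi, steps) @ x0  # final state with no control
    # For an underdetermined system, lstsq returns the minimum-norm solution.
    u, *_ = np.linalg.lstsq(G, x_goal - drift, rcond=None)
    return u.reshape(steps, 3)

def rollout(x0, impulses, n, dt):
    """Propagate the state, applying each impulse at the start of its interval."""
    phi = cw_transition(n, dt)
    x = np.asarray(x0, dtype=float)
    for u in impulses:
        x = phi @ (x + np.concatenate([np.zeros(3), u]))
    return x
```

For instance, `plan_impulses([100, -200, 50, 0, 0, 0], np.zeros(6), n=0.0011, dt=60.0, steps=20)` returns a 20-by-3 array of velocity changes, and `rollout` can be used to check that they bring the relative state to the origin. Minimizing the sum of impulse magnitudes instead of the squared norm would track propellant use more closely, at the cost of needing a convex-optimization solver.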

Experimental Validation

The theoretical results will be validated using the state-of-the-art ASTROS (Autonomous Spacecraft Testing of Robotic Operations in Space) and COSMOS (COntrol and Simulation of Multi-Spacecraft Operations in Space) experimental platforms at Georgia Tech, which allow the simulation of realistic translational and rotational dynamics of spacecraft in a 1-g environment.

Selected Publications and Presentations

- King-Smith, M., Dor, M., Valverde, A., and Tsiotras, P., “Nonlinear Dual Quaternion Control of Spacecraft-Manipulator Systems,” International Symposium on Artificial Intelligence, Robotics and Automation in Space, Pasadena, CA, Oct. 18–21, 2020.

- Driver, T., Dor, M., Skinner, K., and Tsiotras, P., “Shape Carving in Space: A Visual SLAM Approach to 3D Shape Reconstruction of a Small Celestial Body,” AIAA/AAS Astrodynamics Specialist Conference, South Lake Tahoe, CA, August 9-13, 2020.

- Ticozzi, L., Corinaldesi, G., Massari, M., Cavenago, F., King-Smith, M., and Tsiotras, P., “Coordinated Control of Spacecraft-Manipulator with Singularity Avoidance using Dual Quaternions,” 72nd International Astronautical Congress, Dubai, United Arab Emirates, Oct. 25–29, 2021.

- King-Smith, M., Tsiotras, P., and Dellaert, F., “Simultaneous Control and Trajectory Estimation for Collision Avoidance of Autonomous Robotic Spacecraft Systems,” International Conference on Robotics and Automation, Philadelphia, PA, May 23–27, 2022, pp. 257–264.

- "Visual SLAM for Asteroid Relative Navigation", with M. Dor, T. Driver, and K. Skinner, Workshop on AI for Space (AI4Space), Conference on Computer Vision and Pattern Recognition, June 19–25, 2021 (virtual).

- Driver, T., Skinner, K., Dor, M., and Tsiotras, P., "AstroVision: Towards Autonomous Feature Detection and Description for Missions to Small Bodies Using Deep Learning", Acta Astronautica, Special Issue on Artificial Intelligence for Space (AI for Space), Vol. 210, pp. 393-410, Sept. 2023.

- Dor, M., Driver, T., Getzandanner, K., and Tsiotras, P., "AstroSLAM: Autonomous Monocular Navigation in the Vicinity of a Celestial Small Body - Theory and Experiments", International Journal of Robotics Research, Vol. 43, No. 11, pp. 1770-1808, 2024.

- "Seeing in the Dark: Feature Extraction and Matching in Low-Light Regions Using Deep Learning," with D. Kapu and T. Driver, 6th Space Imaging Workshop, Atlanta, GA, Oct. 7-9, 2024.

- "Line-Based Monocular SLAM for Spacecraft RPO," with I. Velentzas, 6th Space Imaging Workshop, Atlanta, GA, Oct. 7-9, 2024.

- King-Smith, M., and Tsiotras, P., "Robust Hybrid Global Dual Quaternion Pose Control of Spacecraft-Mounted Robotic Systems," AIAA Journal of Guidance, Control, and Dynamics, Vol. 47, No. 1, pp. 5-19, 2024.

- Florez, J.-D., Dor, M., and Tsiotras, P., "Initialization of Monocular Vision-based Navigation for Autonomous Agents Using Modified Structure from Small Motion," American Control Conference, Denver, CO, July 8-10, 2025.

- Xue, S., Dill, J., Pranay, P., Dellaert, F., Tsiotras, P., and Xu, D., "Neural Visibility Field for Active Mapping," Conference on Computer Vision and Pattern Recognition, Seattle, WA, June 17-21, 2024, pp. 18122-18132.

- Velentzas, I., Dor, M., and Tsiotras, P., "A Robust Monocular SLAM approach for Spacecraft Rendezvous and Proximity Operations," AIAA SciTech, Orlando, FL, Jan. 8-12, 2024, AIAA Paper 2024-0434.

- Velentzas, I., and Tsiotras, P., "IRoLSD: Illumination Robust Line Segment Detection for Spacecraft Relative Navigation," Acta Astronautica, 2025 (to appear).

- Velentzas, I. G., Florez, J.-D., Bruckner, N., Dor, M., and Tsiotras, P., "SISIFOS: Specialized Illumination SImulator For Orbiting Spacecraft," AIAA SciTech, Orlando, FL, Jan. 12-16, 2026.

Sponsor

This research has been sponsored by NSF.

Contact

For more information, contact Iason Velentzas.