A Framework for Bounded Rationality Autonomy Using Neuromorphic Decision and Action Models

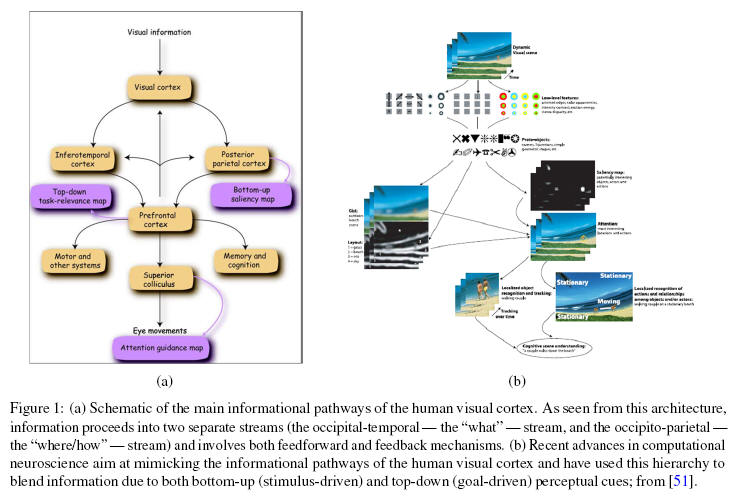

Bounded rationality refers to decision-making in which the main goal is not necessarily an optimal decision, but rather one that meets the intelligent agent’s aspiration level. According to this “satisficing” (or bounded rationality) paradigm, human beings (as well as other mammals) tend to weigh the benefits of reaching the “best” or “most informed” decision against the cost in time, energy, and effort of collecting all the information needed to reach that decision. In this research we propose bounded rationality perception and action models inspired by the perception and action mechanisms of the human brain. The main tenet of the proposed research builds on our belief that the current paradigm of perception in robotic agents is not relevant for most real-life problems, because of its inability to extract actionable information from raw sensory inputs. By extracting and operating on the actionable information, we will be able to develop decision-making models and algorithms for (expert) human-like perception and decision-making for autonomous systems performing demanding tasks at much shorter time scales and with fewer computational resources than what is currently available. To achieve this objective, we will draw inspiration from the cognitive models and the associated perception mechanisms of the human brain. Specifically, and guided by the perceptual architectures of the human visual cortex, we plan to develop probabilistic inference models that leverage attention-focused principles by operating on actionable data in a timely manner. Attention-driven mechanisms can bias decision-making and thus impose a prioritization of (re)action options, each of progressively higher fidelity. The incorporation of new information during this process can be dealt with by imposing (or taking advantage of) the natural spatio-temporal multi-scale structure of the collected data.
We will couple these perceptual inputs with prior segmented dynamic motions (action primitives), similar to the way expert humans are observed to plan and react while executing challenging tasks. These primitives can be learned off-line and fine-tuned on-line and, when coupled with suitable perceptual inputs and the associated sensory inputs, can implement a human-like pre-emptive perception mechanism for reacting very quickly to external stimuli.
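
To make the satisficing idea described above concrete, the following is a minimal illustrative sketch, not the project's actual algorithm. It assumes a hypothetical list of candidate options, a hypothetical `evaluate` function that scores one option at some cost, an `aspiration` threshold, and a `budget` on the number of evaluations. The agent stops as soon as an option meets the aspiration level, or when the budget runs out, rather than scoring every option to find the optimum.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")


def satisfice(
    options: Iterable[T],
    evaluate: Callable[[T], float],
    aspiration: float,
    budget: int,
) -> Tuple[Optional[T], float, int]:
    """Return the first option whose value meets the aspiration level.

    All names here are illustrative assumptions. If no option meets the
    aspiration level within `budget` evaluations, the best option seen
    so far is returned instead.
    """
    best: Optional[T] = None
    best_value = float("-inf")
    evaluated = 0

    for option in options:
        if evaluated >= budget:
            break  # out of time/energy: settle for the best seen so far
        value = evaluate(option)
        evaluated += 1
        if value > best_value:
            best, best_value = option, value
        if value >= aspiration:
            break  # good enough: stop collecting information

    return best, best_value, evaluated


# Hypothetical usage: options scored by a toy utility function.
choice, value, cost = satisfice([1, 4, 7, 9], lambda x: x * 10, 70, 10)
print(choice, value, cost)
```

In this toy run the search stops at the third option, which already meets the aspiration level, even though the fourth option would score higher. That gap is the trade-off between decision quality and the effort spent gathering information.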

This project is in collaboration with S. Soatto, S. Schaal, and L. Itti. Please visit their websites for additional publications.

Sponsors

This project is supported by ONR.

Selected Publications

- Arslan, O., and Tsiotras, P., "Dynamic Programming Guided Exploration for Sampling-based Motion Planning Algorithms," IEEE International Conference on Robotics and Automation, Seattle, WA, May 26-30, 2015, pp. 4819-4826, doi:10.1109/ICRA.2015.713986

- Hauer, F., Kundu, A., Rehg, J., and Tsiotras, P., "Multi-scale Perception and Path Planning on Probabilistic Obstacle Maps," IEEE International Conference on Robotics and Automation, Seattle, WA, May 26-30, 2015, pp. 4210-4215, doi:10.1109/ICRA.2015.7139779

- Arslan, O., Theodorou, E., and Tsiotras, P., "Information-Theoretic Stochastic Optimal Control via Incremental Sampling-based Algorithms," IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning, Orlando, FL, Dec. 9-12, 2014, pp. 71-78.

Movies