LEAD: Learning-Enabled Assistive Driving: Formal Assurances during Operation and Training

Current driver-assist systems are the epitome of cyber-physical systems (CPS): they integrate the physical and digital domains by incorporating a myriad of Electronic Control Units (ECUs) running sophisticated planning and control algorithms, so much so that they are aptly considered “computers on wheels”. With the proliferation of data-driven algorithms, future self-driving vehicles will incorporate even more advanced technologies, such as four-wheel independent steering, drive-by-wire, brake-by-wire, perception modules, autonomous navigation, lane change, and platooning. They will be able to solve many critical tasks, such as perception, decision making, and adaptive control in unstructured environments, and so move towards complete autonomy. However, because present machine learning algorithms are fragile when adapting to unseen environments or unaccounted-for contextual situations, human intervention is still necessary. What is not clear is how to align the decisions taken by the vehicle’s autonomous or active safety control system with the human’s intentions, goals, and skill set. This alignment is paramount for passenger vehicles operating in an “information-rich” world, which require smooth and safe interaction among machine, computer, and human at all levels: the driver, the vehicle, and the traffic. While the modeling and control aspects at each level have received their due attention in the literature, we argue that it is the interaction between these three levels that demarcates the cyber-physical nature of the problem.

Current driving autonomy solutions leverage advanced machine learning algorithms to perform challenging pattern recognition, planning, and high-level decision-making tasks. In particular, reinforcement learning (RL) has proved successful in learning strategies and tasks, often outperforming humans. At the same time, these RL algorithms must align the agent’s goals with those of the human driver without sacrificing safety. Such interactions are vital for safe operation, yet they have not been deeply explored thus far.

There is currently a need to quantify the impact of the human driver within the autonomy loop, both from an individual experiential perspective and in terms of safety. In this research, we propose to increase the performance and safety guarantees of deep neural network architectures operating within a feedback loop that includes the driver by: a) using redundant architectures that blend model-free and model-based processing pipelines, and b) adding safety guarantees both during training and during execution by leveraging recent advances in formal methods for safety-critical applications.

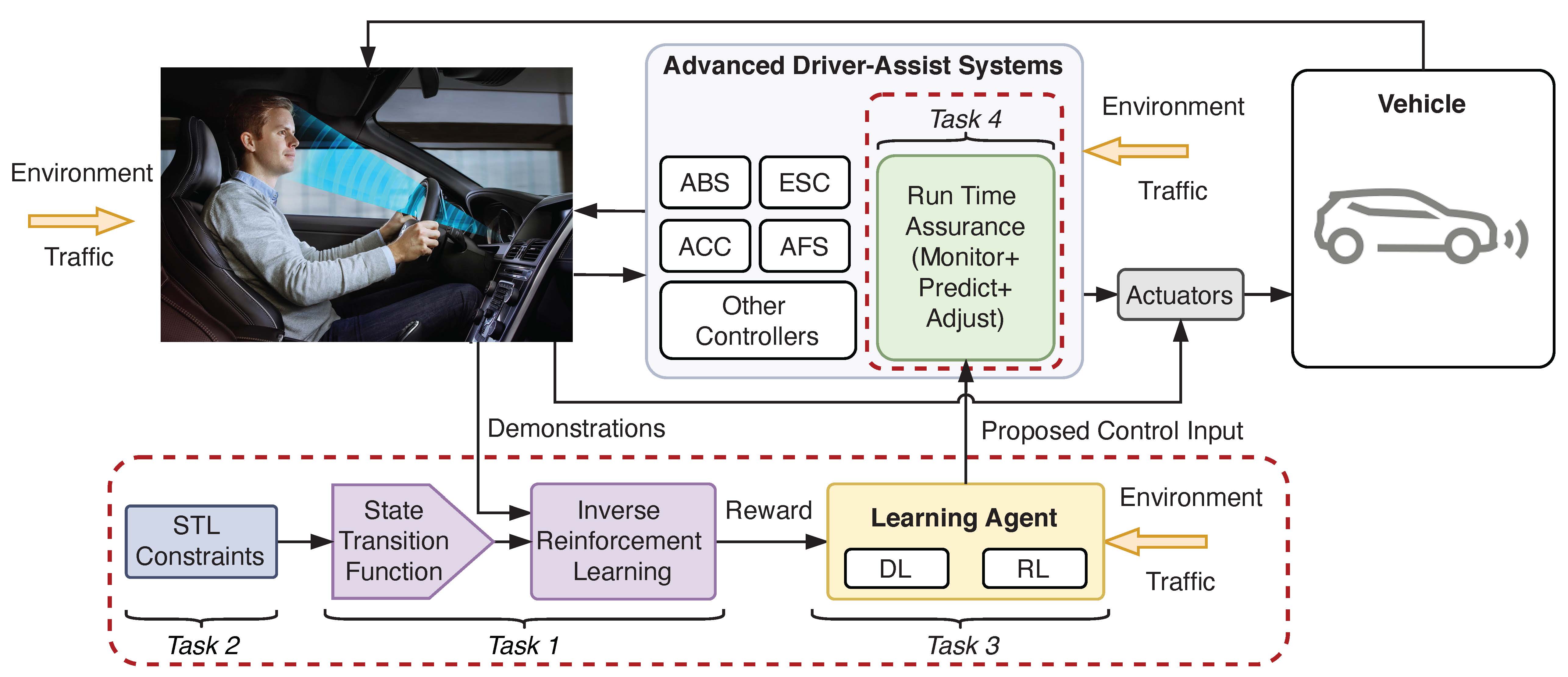

The proposed effort makes fundamental contributions to the challenging problem of safe operation of (deep) neural network-based learning architectures, both during training and during execution. Our methodology is based on four key novel ingredients that guide our technical approach. The first ingredient is a novel deep neural network (DNN) architecture that combines model-based representations with sensor measurements to enhance robustness during training. The second ingredient is the incorporation of signal temporal logic (STL) specifications within the proposed DNN architecture, via a bi-directional recurrent neural network (RNN), to ensure safety during training of learning-enabled advanced driver assist systems (ADAS) while also considering the driver’s habits and driving skills. The third ingredient is the development of new learning-from-demonstration techniques that enable population-based training of controllers to perform a context- or task-specific blending of individual, heterogeneous driver policies. Finally, we formulate an optimization-based runtime assurance (RTA) mechanism that ensures satisfaction of the STL specifications during execution; it relies on efficient reachable set approximations for neural networks, computed using the theory of mixed monotonicity.
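To give a flavor of the reachable set computations mentioned above, the following is a minimal sketch of interval bound propagation through a small ReLU network. It is an illustration only and not the project's implementation: the function names, the toy weights, and the input box are all hypothetical. It relies on one standard fact: an affine map `W x + b` is mixed monotone when `W` is split into its positive and negative parts, and ReLU is monotone, so a box of inputs maps into a box of outputs that can be computed layer by layer.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias); hypothetical representation


def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Bound W x + b over the box lo <= x <= hi.

    Positive weights are paired with the same-side bound and negative
    weights with the opposite-side bound (split of W into W+ and W-).
    """
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        low, high = bias, bias
        for w, x_lo, x_hi in zip(row, lo, hi):
            if w >= 0:
                low += w * x_lo
                high += w * x_hi
            else:
                low += w * x_hi
                high += w * x_lo
        out_lo.append(low)
        out_hi.append(high)
    return out_lo, out_hi


def relu_bounds(lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """ReLU is monotone, so it can be applied to each bound directly."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def network_bounds(layers: List[Layer], lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Over-approximate the network output set for inputs in [lo, hi].

    ReLU is applied after every layer except the last one.
    """
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(W, b, lo, hi)
        if i < len(layers) - 1:
            lo, hi = relu_bounds(lo, hi)
    return lo, hi


def forward(layers: List[Layer], x: Vector) -> Vector:
    """Evaluate the same network at a single input point."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


# Toy two-layer network and input box (values chosen only for illustration).
toy_layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, -2.0]], [0.1]),
]
out_lo, out_hi = network_bounds(toy_layers, [-1.0, -1.0], [1.0, 1.0])
```

A runtime monitor could compare such output bounds against a safe set and switch to a backup controller when containment cannot be shown. The bounds are sound but generally conservative, because each layer's output set is re-boxed before the next layer is applied.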

The project is in collaboration with Sam Coogan and Matthew Gombolay.

Please also visit our VIP project website.

Sponsors

This project is supported by NSF.

Selected Publications

- TBA